Disinformation War: Engaging Content that Divides Us

- Nikoloz Gachechiladze

- Mar 31, 2022

Updated: Apr 29, 2022

Disinformation plays an ever-growing role in the political and geopolitical events of the 21st century. Frequently used by the Russian Federation, it has become a crucial part of the war Russia wages against the West. Through disinformation, Russia incites conflict and confusion, disorients its opponents, and seeks to justify the unjustifiable.

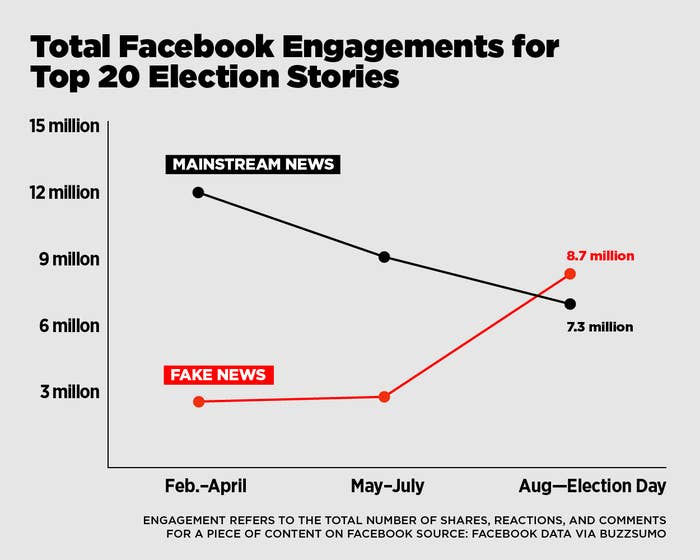

Disinformation can often be more polarizing and generate more engagement than "real" news, which is why it can be a cheaper weapon of information war than reason or logic. It works much like fearmongering in the media: some channels resort to it because it tends to increase watch time and can boost engagement on social platforms.

Disinformation tactics are so prevalent that they can affect elections even in a country like the U.S. and influence daily political life to the point of inciting riots and civil unrest. These events have been documented as organized by foreign accounts far from American borders.

It is alarming that, as the popular chart above shows, people could see more disinformation on their screens than real content on election day. Unfortunately, this is often the case: algorithms value engagement over truth, disinformation bombs can attract more engagement, and that engagement in turn results in more visibility on platforms.
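To make the ranking point concrete, here is a toy sketch in Python of a feed ranked purely by engagement. It is not how any real platform ranks content: the `Post` fields, the `engagement_score` weights, and the sample posts are all hypothetical. It only shows that when accuracy plays no part in the score, the most engaging posts rise to the top whether or not they are true.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    is_accurate: bool
    likes: int
    shares: int
    comments: int


def engagement_score(post: Post) -> int:
    # Hypothetical weights: comments and shares count more than likes because
    # they push content to new audiences. Note that is_accurate is never used.
    return post.likes + 2 * post.comments + 3 * post.shares


def rank_feed(posts: list[Post]) -> list[Post]:
    # Highest engagement first; truthfulness plays no role in the order.
    return sorted(posts, key=engagement_score, reverse=True)


# Made-up posts for illustration only.
posts = [
    Post("Fact-checked report", True, likes=120, shares=10, comments=15),
    Post("Shocking fabricated claim", False, likes=300, shares=90, comments=80),
    Post("Local news update", True, likes=60, shares=5, comments=4),
    Post("Fearmongering rumor", False, likes=150, shares=40, comments=50),
]

for post in rank_feed(posts):
    print(engagement_score(post), post.title)
```

In this toy feed the two false posts score 730 and 370, while the accurate ones score 180 and 83, so both false posts land at the top of the feed.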

Subject

What does the war in Ukraine teach us about fighting disinformation? Ukrainian resistance in this war has been awe-inspiring, and as I see it, the underlying drivers are inspirational leadership, a united people, and the individual capacity to act. In the context of disinformation, I will focus on the last of these. Given that individuals were prepared to act to defend their country, what really stalled and frightened the Russian invaders was the improved capacity of each soldier. With a large supply of anti-tank and anti-aircraft weaponry, every soldier became a threat to a tank. In fact, a partisan battalion equipped with modern armor-piercing weaponry became an armored convoy's worst nightmare.

Of course, war and strategy have many intricacies, but this improvement in individual capacity is the key lesson for a disinformation war, as discussed in more detail below.

How Does Disinformation Spread?

A disinformation campaign behaves like a virus: when it succeeds, it penetrates the system without any clearly visible signs and spreads until it triggers a range of immune responses. Only after the immune system becomes aware of the infection can it produce antibodies to fight it. A key aspect of disinformation war is blurring the fabric of reality, mixing truth with falsehood so that people eventually find it hard to tell what is real and what is fake. The virus hides in the psyche, slowly leading reasonable people to believe ideas they would otherwise consider insane. To continue the analogy, it blinds the immune system so that it does not notice the problem and start fighting it. It often does this by hijacking different modes of thinking.

For disinformation content to be effective, it must first hijack the brain and pull it out of logical reasoning mode, which it does through content that triggers strong emotions. Content that amuses (memes), shocks (graphic images, unprecedented events), or scares (fearmongering, the threat of war) usually serves as a gateway: it makes people more susceptible to disinformation and more likely to interact with it instantly (like, share, comment) without a second thought about checking its validity.

The urge to be the first to share a shocking story also comes into play, so people spread headlines such as these, often without even reading the story, and unfortunate outcomes follow. From the user's point of view this is misinformation, but the creator meant it to mislead and acted with malicious intent, so it can be classified as disinformation. The first step in fighting a disinformation campaign is identifying that it exists.

When a potential victim sets foot in the disinformation swamp, it swallows them and slowly isolates them in an echo chamber.

Social media makes content similar to what you already engage with more visible to you, all so that you spend more time on the platform. This initial drop of interest can turn into a syringe of similar content that reinforces existing beliefs until they become extreme. Self-policing comes in later and further suppresses contrary beliefs. In groups built around a cause, moderate beliefs are usually marginalized, while more extreme allegiances to the group's beliefs are either supported or left unchallenged, which radicalizes the initial cause. This is the gist of misinformation echo chambers, which actors can weaponize against each other.

Once the virus has been identified, the fight begins, and this is where, just as in a physical war, the capacity to target and neutralize disinformation bombs really matters.

How to Deal with Disinformation Campaigns?

This question could be answered with a counter-question: can disinformation campaigns be dealt with at all? The answer is contextual. Every disinformation campaign can reach some degree of success before it hits the radar and is neutralized. For now, all possible solutions to the problem are boring. Some companies are working on AI systems that detect disinformation around specific sets of events, for example COVID/vaccination or elections, but a generalized detection system is quite difficult to build. Satirical or sarcastic messages are hard to interpret, so at this point a human fact-checker is needed to read between the lines. With the volume of data generated by each platform (Facebook alone generates 4 petabytes of data daily), it is infeasible for fact-checkers to go through all of it.

Therefore, for now, the only option platforms have is to narrow the content they check down to reported and flagged posts and accounts, which is how we arrive at the boring solution of individual reporting. If nobody reports or flags content, Facebook's own fact-checkers and third-party fact-checkers are outmatched by the sheer amount of content produced and cannot successfully screen out malicious content. Platforms also have a disincentive to remove fake news, since it generates more engagement, which means more time spent on the platform and more overall ad revenue for the company. But let's set that aside and assume that all social media companies are honestly trying to clean their platforms of potentially harmful content.
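The triage problem can also be sketched in a few lines of Python. This is a hypothetical model, not Facebook's actual review pipeline: `select_for_review`, the `capacity` parameter, and the report counts are all made up for illustration. Reviewers with a fixed capacity only look at the most-reported posts, so content nobody reports is never checked at all.

```python
import heapq


def select_for_review(report_counts: dict[str, int], capacity: int) -> list[str]:
    """Return the IDs of the most-reported posts that reviewers can handle.

    Hypothetical model: reviewers only see posts with at least one report,
    and can check at most `capacity` of them, most-reported first.
    """
    # Posts with zero reports never enter the review queue.
    reported = {post_id: n for post_id, n in report_counts.items() if n > 0}
    # Pick the `capacity` posts with the highest report counts.
    return heapq.nlargest(capacity, reported, key=reported.__getitem__)


# Made-up report counts for illustration only.
report_counts = {"post_a": 0, "post_b": 12, "post_c": 3, "post_d": 0, "post_e": 7}

print(select_for_review(report_counts, capacity=2))  # ['post_b', 'post_e']
```

With a capacity of 2, `post_a` and `post_d` are never reviewed, however malicious they may be, simply because nobody reported them. Even with unlimited capacity, only the three reported posts would ever reach a reviewer.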

Another potential solution could be a new checkmark. Like the blue checkmark, it would be a Facebook/Meta product, in this case aimed specifically at large media publishers: let's call it a green checkmark. Publishers who already have a blue checkmark could opt in and pay a fee for Facebook-verified fact-checkers, who would make sure each informational post has no malicious intent and, if the information is verifiable, allow the post to carry the checkmark. If it is not verifiable or is misleading, it would not receive the checkmark. If you like the idea, you can join the petition below.

This could be a good alternative, considering that, according to a 2020 report from the Center for Countering Digital Hate (CCDH), fewer than 5% of false posts were removed after being reported as fake. Therefore, although mass reporting of disinformation is the most immediate solution, platforms still have inefficiencies on their side.

To keep up with the initiative, follow the Fact Check Media page on Facebook. This concept is far from perfect, so everyone's input and support will be greatly appreciated.